It seems everyone is weighing in on AI these days, so I guess it’s my turn.

To be honest, I’m not entirely sure what to make of all of it just yet. We are getting inundated with AI for sure and have been for quite a while, but now that it seems to be reaching a critical mass in our collective consciousness, along comes all the requisite pearl clutching about it on social media, legacy media, and just about everywhere you turn. At times I am no different, often referring to Google, Meta, and the like as our feudal tech overlords, domineering over us, watching and influencing everything we do, profiting from us. It’s a perfect metaphor as far as this medievalist historian is concerned.

For instance, there was a recent article circulating in just about every college and university department, with my own being no exception, about how students are cheating their way through college with large language models (LLMs, or the fancy name for AI) like ChatGPT. We are all wondering what to do with and about this technology and how it is affecting our students, which also means pondering what this holds for our future and theirs, not just in higher ed but in general.

Likewise, many here on Substack have written about it, like Rod Dreher, who shared a particularly dark view from an interview with an AI tech insider, and my friend and podcast cohost C.J. Adrien, who has written about his own experiences with AI as a fiction writer. There have even been some funny stories about it, like the joke someone played by inserting Mark Zuckerberg’s voice in a crosswalk machine, letting us all know how utterly screwed we are.

It certainly does feel like there’s not much anyone can do about it. From Google’s Gemini to Adobe’s AI Assistant, which now tells me every time I open a document, “This appears to be a long document. Save time by reading a summary,” it feels like intrusiveness disguised as helpfulness. No thanks, Adobe. I actually like reading long, thoughtful articles and don’t need everything in my life reduced to bite-sized pieces.

Even one of the feudal tech overlords, Elon Musk himself, has spent years warning about the potential dangers of AI. He calls it an existential threat if we don’t figure out how to harness it for good.

But not everyone is freaking out. For instance, there is this piece by Tyler Cowen at the Free Press. And it is true, historically speaking, that new technologies always come with concerns alongside possible benefits. People thought similar things when the printing press first showed up in 15th-century Europe. “Yay, cheap printed material which can help democratize knowledge and make it accessible for more people!” and “Boo, cheap printed material which can help democratize knowledge and make it accessible for more people!” I guess it matters whether you’re a glass-half-full or glass-half-empty type of person.

However, I also think, like it did back then, that it matters whether you are in power. I’m not generally one to worry about things over which I have no control, but it doesn’t take a rocket scientist to see how AI in the wrong hands of powerful or power-hungry people can be a recipe for disaster. And that is rightfully concerning.

The other problem seems to be with its ubiquity. We cannot opt out. The horse is long ago out of the barn. So then the question becomes, what do you do with it? I’ve been party to several conversations among academics who are tweaking assignments and syllabi in desperate (and to my mind, futile) attempts to stay one step ahead of students who use AI to “cheat.” However, some of them understand AI is here to stay and are trying to figure out ways to incorporate it into their teaching instead. I tend to agree with this approach because otherwise we are engaging in a game of Whack-a-Mole, and this is not a battle we can win.

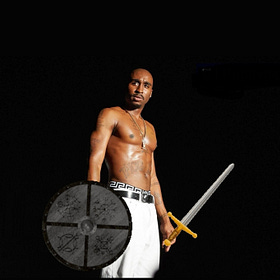

I think there may come a time when people who use AI to get through college with good grades or who use it to pass the bar exam, for example, will face a moment when they actually need the knowledge or skills they don’t have. When the emperor is found to have no clothes, then what? People who do hiring will no longer care what your GPA or SAT score was or what prestigious university you went to. None of it will carry any weight because it will be assumed you did not do the work but rather let AI do it for you, and so your fancy degrees won’t be worth the paper they’re printed on. We’ll have to come up with new ways to assess smarts and abilities. To separate themselves from the pack, some people will start adding disclaimers stating outright that they haven’t used AI, in an attempt to retain a shred of integrity and credibility. I have done this myself just recently with the piece I co-wrote about how Tupac could have been a Viking. But I have the sinking feeling that these will end up being half-measures acted out in this frantic phase where we still think we can control this thing.

Tupac Could Have Been a Viking

Over the past couple of weeks I took a little diversion from my usual research and co-wrote a piece which you can read here on Medievalist.net.

To be fair, like almost everyone by now, I imagine, I too have used AI. I have found it helpful in compiling and condensing information, such as when I want to quickly understand the technical difference between two things. So, I’m not a naysayer. And I realize that even though it seems only recently to have appeared everywhere out of nowhere, we have been relying on it in many forms of automation for a very long time. But that reliance goes almost unnoticed by most of us, and that is likely the insidious part. We have to balance the promises of upsides with the potential for downsides.

I’m old enough to remember when personal computers first came on the scene, and all the promises the tech overlords made to us about how they would free us up to enjoy more leisure time because we could get things done more quickly and efficiently. But for many, the promises quickly turned into nightmares when we came to rely on computers too much. Jobs were eliminated by new computing technologies, or at the very least we all ended up chained to our desks, working longer hours being more “productive.” Rather than enjoying the newfound leisure time we were promised, we all ended up peasants in a feudal technoscape.

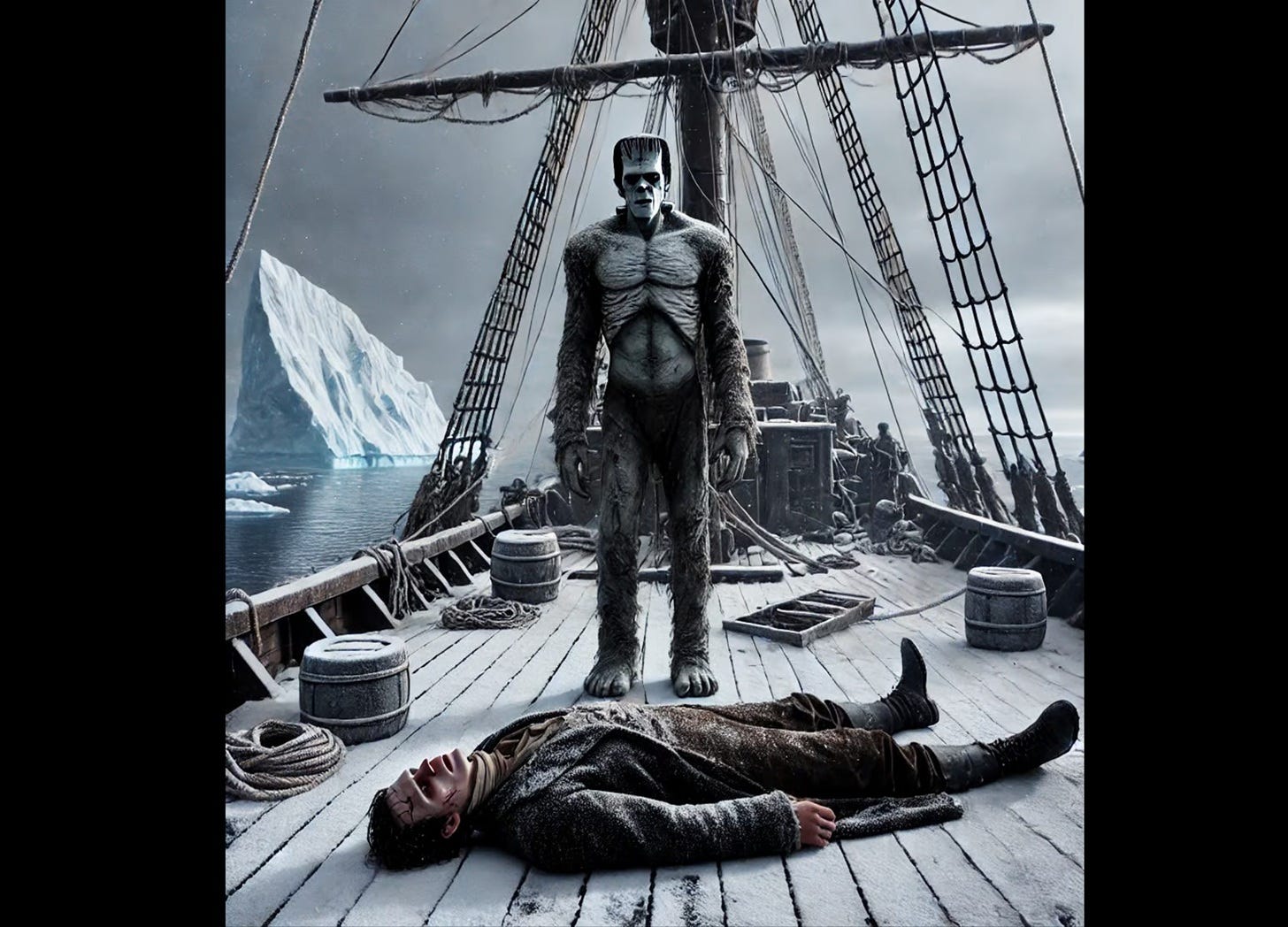

What it all really reminds me of is the 19th-century Gothic novel Frankenstein by Mary Shelley. I keep saying that we created this monster, so ultimately we are in control of it. It seems odd to me (and admittedly this is probably a reflection of just how little I know about this technology) that we can create AI and then begin talking about it in terms of it attaining a life of its own, like it can become separate from us and sentient in its own right. We make it and then turn over our power to it. We do that. It doesn’t do it to us. Why? This surrender is probably the most puzzling part to me.

It does make me wonder, if in 1,000 years there are historians or, indeed, people still around, what those historians will see when they look back at us from the same distance from which I routinely examine the Viking Age. How will we have handled this situation? They will enjoy the benefit of hindsight being 20-20. But we are stuck because we can only live within our historical context, unable to step outside it to see it objectively, let alone see what the future will hold. Since we’re living it, we can’t know what we don’t know. And unless we invent some very accurate crystal ball technology, that will be only for future generations to see.

And what about the future? To make matters worse, many, like Musk, talk of this AI predicament turning into an existential crisis, as in some day AI may want to eliminate the human species. What. The. Fuck? I don’t know how this is possible. How would AI be able to form an intention like that unless we created it to do so? And if so, why on earth did we do that? This gets so far into the ethical and philosophical weeds that, in my opinion, there’s no denying this is one of those times in history where we have “technologied” ourselves into a corner by inventing something we were smart enough to invent but not smart enough to foresee the consequences of, let alone deal with them. Oppenheimer comes to mind. What also comes to mind is that just because you can doesn’t mean that you should. But humans have never figured that out.

Ugh. So, as I said at the start, I’m not really sure what to think of all this. Fear because of what could happen but may never? Apathy because I can’t do anything about it anyway? Disgust because humans can be so smart and yet so stupid? Optimism because maybe AI represents some type of progress? Or rage because we may likely be the architects of our own demise?

If that dystopic existential crisis does come to pass, perhaps like Frankenstein’s monster, once AI has become a fully sentient being and its creator is dead, it will feel sadness and guilt for the harm it has caused and vow to self-destruct. Last seen floating on an iceberg in the Arctic North, AI will be like Frankenstein’s monster, and we won’t really know how this story is to end.

And yeah, none of this post was made using AI. In case you were wondering.

Thanks, Terri! I have a theory on how this is all going to go, based on my experience in sales and marketing. To be successful in business, you have to have a defined market. Looking at LLMs and their defined markets (i.e., who they sell to), it’s becoming apparent that tech companies are selling their service to the people those services are starting to replace in the workforce. As AI replaces more workers, more people will cancel their subscriptions. As companies go bankrupt because AI replaces them (like BPOs, marketing firms, law firms, etc.), the very people who would have bought subscriptions to the LLMs will no longer be able to, or want to. The few big corporations who will benefit at first from savings using AI will also go belly up because their target markets will also be out of work. And because LLMs are owned by private corporations, their durability depends on the market. If the market collapses and Big Tech goes belly up, and they absolutely can, then AI may become a casualty of an economic crisis. So like you, I don’t see a future where AI takes over the world and kills all the people. It will file for Chapter 11 long before that because it will have put all its own customers out of work. AI may be what frees us from our tech overlords, by accident.

"People who do hiring will no longer care what your GPA or SAT score was or what prestigious university you went to. None of it will carry any weight because it will be assumed you did not do the work but rather let AI do it for you, and so your fancy degrees won’t be worth the paper they’re printed on."

Rather than this leading to a collapse, I see it leading to some really unfortunate roads that sustain the system. But not in a good way:

Hiring managers can often ignore the degree and do a modicum of in-person testing during interviews to assess real reading comprehension and writing ability. Or specific skills. If they do that, it further lengthens job interview processes. It used to be one interview. Maybe introductory jobs will require a series of three interviews: two controlled-condition tests, and maybe a third where you finally talk to human beings.

Which would suck and be pointless, but it's not like we're avoiding pointless things that suck with regard to technology right now.

The bigger issue: People don't get hired because of degrees, and so people aren't as likely to be hired with higher "interview scores" no matter what hiring process is adopted. People get hired because of networking, which is largely built on the base of the school you went to. Hiring managers get word of mouth, make a decision, and then begin the application process headed toward a conclusion that's already been made.

And if that's the case for managers, and we really don't care that much about people learning in college as much as who they make friends with, then why not just go whole-hog? Let's just drop the curriculums students want to cheat at anyway and make college a series of organized teambuilding exercises.

You can only take Binge Drinking once, but you can take Sophisticated Drinking multiple times since there are lots of snooty drinks to learn about. Polite Media Discourse for Polite Company can also be taken multiple times, especially after a new season of White Lotus. Maybe branch out with some physical activities like Paintball to be well-rounded. The really tough course would be Using AI to Write, which would consist of training on AI, not writing. Obviously. And would usually be taken the same semester as Binge Drinking.